Protecting the data-ignorant and vulnerable from unethical bastards

Reports about groups such as Cambridge Analytica (CA) inappropriately using Facebook data, breaching privacy and using psychological manipulation to influence the 2016 US election have sent the world into a spin. Unethical use of private data has resulted in a class action being filed against Facebook in recent days, claiming the social media giant made ‘false and misleading’ statements about how it handles its users’ personal data.

Facebook data was allegedly collected by Cambridge Analytica to build detailed profiles of around 50 million people and to target them with misinformation and undue influence, skewing vulnerable and undecided voters away from Hillary Clinton towards Donald Trump. CA took Facebook ad targeting to the unethical extreme.

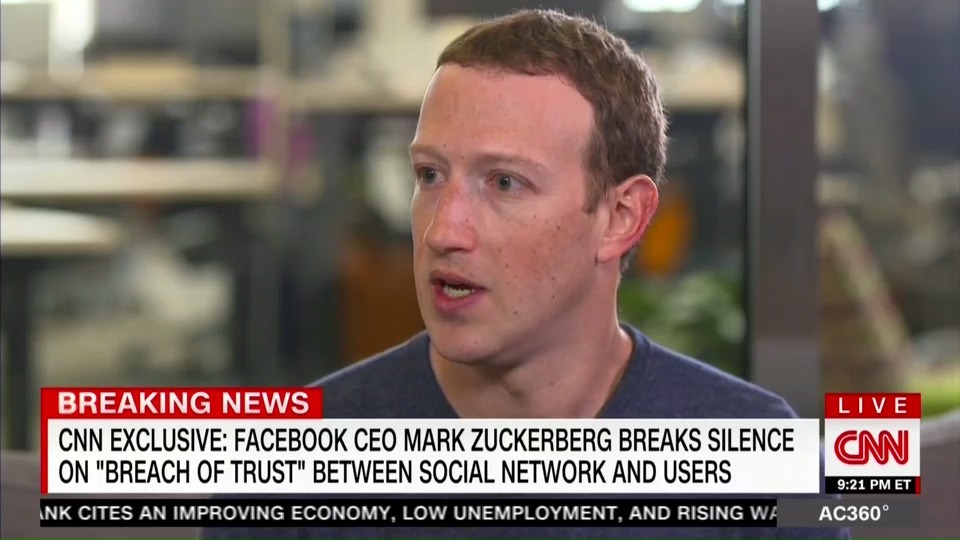

Mark Zuckerberg yesterday released a statement via his Facebook account …

“We have a responsibility to protect your data, and if we can’t then we don’t deserve to serve you. I’ve been working to understand exactly what happened and how to make sure this doesn’t happen again. The good news is that the most important actions to prevent this from happening again today we have already taken years ago. But we also made mistakes, there’s more to do, and we need to step up and do it.” Read the full (rather lengthy) post here.

Zuckerberg goes on to say more specifically in relation to Cambridge Analytica that “This was a breach of trust between Kogan, Cambridge Analytica and Facebook. But it was also a breach of trust between Facebook and the people who share their data with us and expect us to protect it. We need to fix that.”

So, what actually happened? Whistleblower Christopher Wylie, a former Cambridge Analytica director, revealed in 2018 that the company’s executives systematically and knowingly ran campaigns based on psychological and personality profiles mined from Facebook data. In 2013, a Cambridge University researcher named Aleksandr Kogan created a personality quiz app that was downloaded by around 300,000 people. Because of the way Facebook worked at the time, the app could collect data not only from the people who used it but also from their friends.

“We exploited Facebook to harvest millions of people’s profiles. And built models to exploit what we knew about them and target their inner demons. That was the basis the entire company was built on.” More here

This is a complex matter, and in my mind is less concerning than illuminating. The issue of privacy is now front and centre.

Our ongoing consumer research illustrates that the convenience, portability and other benefits of digital applications such as Facebook create a level of blind faith and naivety about the importance of data privacy, user rights, how data is used and what this means to the individual.

Most people do not think about the data they share via social media, banking and other large corporate and government organisations, as there is a general level of trust that there are adequate laws and protections and that, by and large, there is nothing to worry about, since organisations are assumed to be ethical. While distrust is growing across the community, taking proper security measures is critical in slowing the erosion of trust.

Critical in this discussion is that this isn’t so much an issue with the data itself as with how the data was used. Cambridge Analytica used the data records of 50 million Americans to exert a premeditated psychological influence through false Facebook ‘advertising.’ Posts were aimed at potential voters, precisely targeting their vulnerabilities.

There is currently a responsibility placed on Facebook users to protect themselves, to take the necessary steps to manage their privacy, and to be intelligent and not gullible about what they see, read or hear. Feedback from participants in our research shows some people are highly distrustful of media, while others are anxious about what they can believe.

“You discriminate daily in your personal conversations. You need to discriminate the media as well and people have lost that ability because of the amount of media that’s been forced upon us.”

However, as the Cambridge Analytica whistleblower Christopher Wylie recommends, it is important to go through life with a level of caution:

“I go through life with a healthy dose of skepticism. And, I think that healthy dose of skepticism as to what you are seeing and what you are hearing and who you are talking to, is the best way to go through life.” Christopher Wylie

There is an expectation that government is there to set protections and enforce data privacy laws, and that businesses are working to the highest ethical and legal standards. In cases such as Cambridge Analytica, the company claims to have believed it was acting within the law and its rights, even if at times its practices were highly questionable and unethical. Too often, ‘entrepreneurs’ balance the financial reward against the risks of inappropriate behaviour.

Care is taken to profit by doing whatever is possible without being caught. They justify questionable behaviour as normal, acceptable or necessary to achieve objectives. CA executives have been caught on camera discussing their unethical practices, and Facebook has long been viewed as sloppy in protecting user privacy. Such media revelations simply confirm the skeptics’ reservations.

The problem is the assumption that the world is ideally ethical and good. Yet without strict, enforced protections beyond self-regulation, it is too easy for privacy to slip as businesses work to survive, prosper and meet financial and other strategic targets. Privacy is too often a secondary or tertiary priority, if that.

“The good news here is that the big actions that we needed to take to prevent this from happening today we took three or four years ago. But had we taken them five or six years ago, we wouldn’t be here right now. So I do think early on the platform we had this very idealistic vision around how data portability would allow all these different new experiences, and I think the feedback that we’ve gotten from our community and from the world is that privacy and having the data locked down is more important to people than maybe making it easier to bring more data and have different kinds of experiences. And I think if we’d internalized that sooner and had made these changes that we made in 2014 in, say, 2012 or 2010 then I also think we could have avoided a lot of harm.” Mark Zuckerberg WIRED interview this week

https://www.youtube.com/watch?v=v18dGTpzUCM

Businesses such as Facebook often prefer self-regulation, but this can be tricky, and there are many examples of the fast-growth juggernaut profiting from questionable data selling, not overly different from Cambridge Analytica. It is easy to respond with an apology and a plan when faced with a class action, a large share price drop and negative media and user conversation. Facebook’s previous three trading sessions wiped out nearly $45 billion in stock value (more here).

Yesterday, Mark Zuckerberg promised that Facebook is “committed to getting it right moving forward.” While this is a fine pursuit, it also implies earlier gaps in privacy protection while the focus was on more pressing innovation.

Bad behaviour eventually catches up, even if justified to oneself as normal or ethical (aka the Rolf Harris Effect). Poor corporate citizens can only be unethical and hide for so long before being discovered and shamed.

It is important to recognise that data breaches do not need to be of the magnitude of Facebook and CA to still be questionable. Over my 25 years collecting consumer data on behalf of corporate and government organisations, I have too often found that privacy literacy and competency within organisations are low, and that privacy is not taken seriously. There is a high level of naivety about the seriousness and requirements of Australia’s Privacy Act at all levels of organisations.

Too often I have found myself and others on our team needing to take a firm stand against otherwise seemingly reputable organisations seeking to collect, analyse and store consumer data in ways that would contravene ethical and even legal requirements. It is common for CEOs, senior management or sales teams to want to identify people and use data in ways that should be obviously questionable. Generally speaking, showing excerpts from legislation and codes of conduct is sufficient, yet even then, a firmer stand or a decision not to work together can be the only solution. Most likely the intentions are good, but business growth and other strategic considerations can trump ethics and privacy risks.

The market and social research industry has long worked to the highest legal and ethical standards in data privacy (e.g. AMSRO, AMSRS and ESOMAR). In Australia, this includes the professional code of conduct, the Privacy (Market and Social Research) Code 2014, ISO 20252 (market, opinion and social research) and AS ISO 26362 (access panels in market, opinion and social research). You can find a list of research companies working to the highest privacy, ethical and quality standards in Australia, including Square Holes, here.

“The Notifiable Data Breaches (NDB) scheme is an amendment to the existing Privacy Act. The NDB scheme introduces mandatory data breach notification obligations. The scheme will also toughen up privacy obligations by companies working with personal information with considerable fines (of up to $1.7 million) for a privacy breach.” Association of Market and Social Research Organisations

Data breaches are quite common in the US, the UK and globally. In 2017, brands as diverse as Xbox, Dun & Bradstreet, Gmail and Kmart all suffered serious data breaches.

“In 2016, reported data breaches increased by 40%. Yahoo also announced the largest data breach in history last year, affecting more than one billion accounts. What will 2017 hold? We’re hoping for the best, but you may just see 2017 data breaches get even more messy and serious.” 2017 Data Breaches – The Worst, So Far

Or, check out the handy Wikipedia ‘List of data breaches’ here.

There are fines associated with data breaches, yet the question comes back to whether these are just viewed as part of the risk-return balance of running a business: ‘How far can we push data privacy laws without being caught? Even if we are caught, it will soon be forgotten, and the fines are likely tiny compared with the profit and other benefits of focusing on growth and innovation over privacy protection.’ This kind of management discussion quite likely took place around the recent CA – Facebook infringement. Do legal consequences need to be much, much higher?

Critical in all of this is that, as a community, we do not become paranoid and hysterical. The world is not in total decay, and by and large our privacy is likely respected and treated appropriately by our corporate and government enterprises. That said, major breaches of trust such as this bring the need for rectification to the fore, and we realise that self-regulation is nowhere near sufficient.

It is critical that laws and regulations protect individuals, particularly the ignorant and vulnerable, and that the risk of being caught and harshly penalised discourages, and hopefully removes, unethical profiteering bastards.

While social media has had many strengths, such as creating new communities and reducing isolation for some people, there is potential for abuse. The new frontier is likely to be how to harvest and use the vast amount of deep consumer data in a legal and ethical manner to help plan a better future: rather than using the data for evil and manipulation, developing tools that drive positive social and cultural change.

Much of this likely comes back to empowering and protecting the rights of users of Facebook and other social and digital platforms: making it easy for them to adjust their privacy settings and even lock their data. Facebook clearly has put, and is putting, such systems and protections in place.

Ultimately, empowering the user is key. In recent years there has been increasing conversation about individuals owning their data, and the need for systems and regulations to better facilitate this. Perhaps this will be our next wave of innovation.

Legislative work in Australia is already moving in this direction.

“The Federal Government has announced plans to legislate a national ‘Consumer Data Right’ bill, which will allow customers of banks, utilities and telecommunications companies better access to their transactional data.”

Will this ever extend to Facebook and other similar platforms?

One of the key fundamentals of privacy protection is the use of identified vs de-identified data. The Facebook – CA issue is largely about how identified data was used inappropriately.

Market research and similar sectors generally use de-identified data in analysis to protect respondent privacy. This applies to quantitative data, such as survey responses, and to qualitative data, such as material from focus groups. Storing, analysing and using data in ways that avoid linking it back to individuals is critical.

“De-identification means a process of ensuring that identifiable research information is rendered permanently non-identifiable, i.e. without retaining a means by which the information could be reasonably re-identified.” Privacy (Market and Social Research) Code 2014
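To make the distinction concrete, here is a minimal Python sketch of what a de-identification step for survey records might look like. The field names (`name`, `email`, `phone`, `facebook_id`, `age`, `postcode`) and the generalisation rules are hypothetical, chosen only for illustration. The sketch drops direct identifiers, coarsens quasi-identifiers such as exact age and full postcode, and deliberately keeps no lookup table or key, in the spirit of the Code’s requirement not to retain a means of re-identification. It is not a description of any particular organisation’s process or a compliance check: real de-identification also has to consider small cell sizes and linkage with other datasets.

```python
from typing import Any, Dict

# Hypothetical direct identifiers; a real project would define these per study.
DIRECT_IDENTIFIERS = {"name", "email", "phone", "facebook_id"}


def age_band(age: int) -> str:
    """Generalise an exact age into a 10-year band, e.g. 37 -> '30-39'."""
    lower = (age // 10) * 10
    return f"{lower}-{lower + 9}"


def deidentify(record: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new record with direct identifiers removed and
    quasi-identifiers generalised. The input record is not modified,
    and no mapping back to the original person is stored anywhere."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "age" in out:
        out["age"] = age_band(out["age"])
    if "postcode" in out:
        # Keep only the first two digits of a four-digit Australian postcode.
        out["postcode"] = str(out["postcode"])[:2] + "xx"
    return out


respondent = {
    "name": "Jane Citizen",
    "email": "jane@example.com",
    "age": 37,
    "postcode": "2000",
    "q1_trust_in_social_media": 2,
}
print(deidentify(respondent))
# → {'age': '30-39', 'postcode': '20xx', 'q1_trust_in_social_media': 2}
```

The choice to generalise rather than hash identifiers is deliberate: a keyed hash or lookup table would still be a retained means of re-identification, which is pseudonymisation rather than de-identification in the sense quoted above.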

Being able to sell one’s own data, most likely in de-identified form, is worth exploring, and greater transparency about data, and the confidence that brings, is essential. Yet the role of government and independent regulators remains critical, as too many consumers have been, and always will be, ignorant and vulnerable when it comes to their personal data, particularly when weighing it against the convenience and other benefits of digital applications.

Some interesting links:

It is fascinating watching this unfold in discussion on Twitter, including calls for organisations to #DeleteFacebook …

- Elon Musk deleted Facebook pages for Tesla and SpaceX in response to #DeleteFacebook

- MARK ZUCKERBERG TALKS TO WIRED ABOUT FACEBOOK’S PRIVACY PROBLEM

- Mark Zuckerberg: “One of the biggest mistakes” of Facebook is not digging deeper into Cambridge

- HOW U.S. CORPORATIONS CAN AVOID CRIPPLING DATA BREACH FINES IN 2018 AND BEYOND?

- The 17 biggest data breaches of the 21st century

- Cambridge Analytica and its many scandals, explained

- Facebook shares slide over 2 percent as Cambridge Analytica data breach fallout continues

- This Is the Personal Data that Facebook Collects—And Sometimes Sells

Over the past few years, one of my behind-the-scenes R&D projects with Square Holes has been investigating and experimenting with ways to use social data most effectively and ethically for research purposes. Way back in 2013, I presented at the Australian Market and Social Research Society annual conference. My thinking has progressed greatly since, but you can have a look at the slides from 2013 here. It is a most fascinating topic. If you are interested in discussing it further or sharing your thoughts, please get in touch.

Square Holes works to the highest privacy, ethical and quality standards, including the Association of Market and Social Research Organisations Trust Mark.

“Choosing an AMSRO Trust Mark research organisation ensures that the information gathered is accurate, ethical and meets privacy regulations. The Trust Mark is an assurance for any business and government decision-maker that they are buying research that is quality-tested and meets not only ethical standards but also goes over and above minimal privacy legislation.

Members awarded the Trust Mark must adhere to the following industry criteria:

- be a registered AMSRO member working under Australia’s first and only registered APP Industry Privacy Code

- be independently audited (annually) for certification to the International Market and Social Research Standard ISO 20252 (or ISO 26362)

- comply with the industry Code of Ethics.”

More via the AMSRO website and directory here.

Square Holes abides by the AMSRO Privacy Code 2014 for Market and Social Research; is ISO 20252:2012 certified (Quality in Market and Social Research); and has been an Association of Market and Social Research Organisations (AMSRO) member since 2004, endorsed by the Trust Mark. Our researchers are members of the Australian Market and Social Research Society (AMSRS) and are bound by its Code of Professional Behaviour.

Thank you so much for reading all….. the way to the end. Please have a lovely day. 🙂