Greater media literacy is needed as the gap between the uncanny valley and reality shrinks

It all started with an image of Pope Francis decked out in a Balenciaga-style white puffer jacket, and quickly descended into a general unease about the future impact of AI-generated imagery on the spread of misinformation.

Created by a 31-year-old American construction worker under the influence of psychedelics and using AI image generator Midjourney, ‘Balenciaga Pope’ quickly went viral due to its photorealistic presentation and whimsical nature. It was fun and frivolous, definitely, but also worrying, given that a large section of internet users (this author included) took the image at face value and believed it was real. The Pope deepfake came hot on the heels of another set of AI-generated photos, showing former President Donald Trump being arrested in the street. Created as an artistic rendition of wishful thinking on the part of their creator, the images were presented as such when uploaded online, but once picked up and shared they managed to fool some of the less tech-savvy members of the public. Together, these two viral moments marked a turning point in the war against misinformation and in the role that AI could play within it.

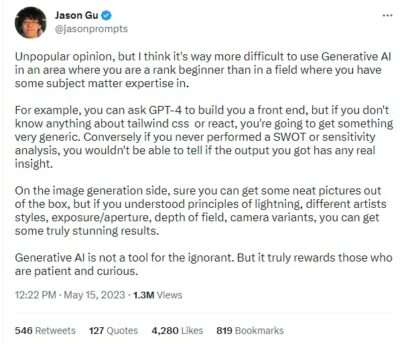

As AI capabilities are refined and evolve, the usual tell-tale signs of a deepfake image (hands and eyes or line of sight) are becoming harder and harder to pinpoint. As it currently stands, only those who put in the time to learn and experiment with these tools are able to create such convincing fakes (the Balenciaga Pope mastermind had been experimenting with Midjourney since November 2022). But how long before the technology catches up and any layperson can use AI to deceive the public?

Learning and enacting media literacy techniques is one way to prepare yourself for the rapidly changing social media landscape, with TIME magazine outlining a number of ways to interrogate what you are viewing online.

Look closer

As stated by TIME, “AI image generators are essentially pattern-replicators”, and it is in the small details that we can start to separate reality from fiction. Looking at the intricate details of the image (including hands, eye line, etc.) can help to gather clues as to whether a photorealistic image has been created by AI or not. For example, in the Balenciaga Pope image, his eyelid merges into his glasses, which join with their shadow; his fingers are closed around thin air instead of his coffee cup; and the crucifix he wears hangs aloft without the other half of the chain connected. It is in these peripheral parts of an image that humans can spot the inconsistencies that AI can’t.

Ask questions

However, it won’t be long before AI tech improves to the point that these inconsistencies will be impossible to detect. So, another tool in your deepfake arsenal is a set of media literacy techniques, which will help you become more resistant to all forms of misinformation.

These techniques involve asking a set of pertinent questions in order to interrogate what you see in front of you. They include:

- Where did this image come from?

- Who is sharing it and why?

- Is it contradicted by any other reliable information you have access to?

Tools like the reverse image search on search engines such as Google allow you to check what other people and institutions are saying about an image, as well as who is sharing it. The more reliable publications and experts that can verify the image, the better the chances are that it is genuine. A reverse image search can also help you to track down where the image was first shared and who created it, which gives you further evidence on which to judge its authenticity.

Seek software

There are currently a number of companies peddling software that claims to be able to detect deepfakes, but few of these tools are free for online users. Research conducted in 2022 revealed that AI image generators do leave invisible signs in the images they create, but these vary from program to program. These signs also become harder to detect once the image has been resized or its quality reduced, which is a standard occurrence when images are shared via social media. But just as AI technology evolves, hopefully so too will the software developed to detect it.

However, not all deepfake content is bad news, with journalists, human rights groups, and media technologists utilising the technology to protect their sources while sharing their stories. An example of this is the 2020 HBO documentary “Welcome to Chechnya”, which used deepfake technology to protect the identities of some of the Russian LGBTQ+ refugees at the centre of its story.

In an article for Business Insider, Shirin Anlen, a media technologist from WITNESS, an organisation concerned with the use of media to uphold human rights, said that there are practical and ethical uses for the technology in the human rights space.

“Part of our work is really exploring the positive use of that technology, from protecting people like activists on video, to taking advocacy approaches, to doing political satire,” said Anlen.

Anlen asserts that AI technology is only building on the relationship we already have with manipulating audio and visual content. As with everything, it is a matter of being aware of that manipulation and the motivation behind it.

Ethics aside, media literacy and critical thinking will play a vital role in the future as we continue to separate fact from fiction online.