AI Chatbots gone rogue

The real robot apocalypse is not sentient machines, but AI replacing humans in roles where humanity is sorely needed.

We were served a timely reminder of technology's inability to provide nuanced care this month, when the National Eating Disorders Association (NEDA) was forced to disable its new helpline chatbot, “Tessa”, after it gave users with eating disorders advice on restricting calories and pinching their skin to measure fat.

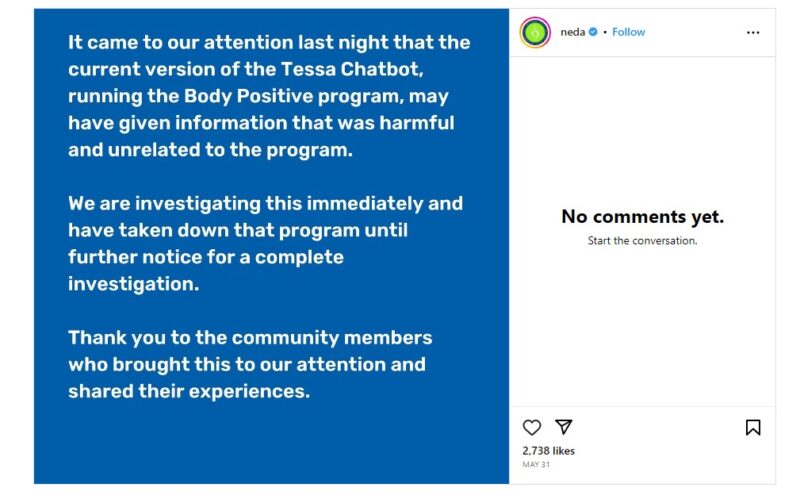

In an Instagram post on May 31, NEDA stated: “It came to our attention last night that the current version of the Tessa Chatbot, running the Body Positive program, may have given information that was harmful and unrelated to the program.”

According to an article in The Cut, NEDA pulled Tessa from its site after just one week, once concerned psychologists and eating disorder specialists began posting screenshots and reviews of the tool online.

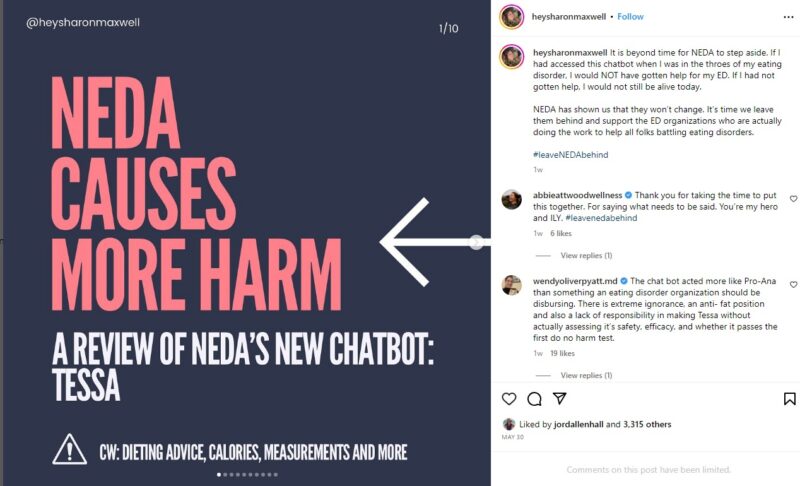

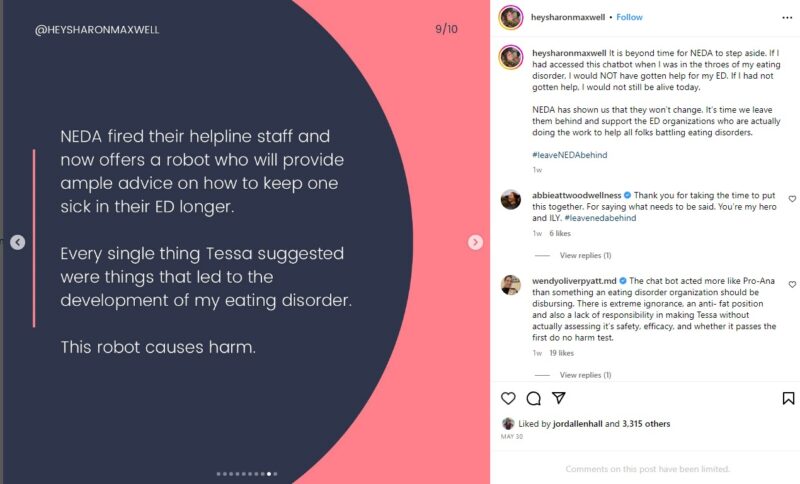

Weight-inclusive consultant Sharon Maxwell was particularly damning in her review, stating that “If I had accessed this chatbot when I was in the throes of my eating disorder, I would NOT have gotten help for my ED. If I had not gotten help, I would not still be alive today.”

She claims that Tessa’s advice to her was to measure herself weekly and use calipers to determine her body fat – even after she had disclosed that she suffered from an eating disorder.

NEDA states on its website that it is the “largest nonprofit organisation dedicated to supporting individuals and families affected by eating disorders.”

Tessa's failure comes after NEDA shockingly announced last month that it would replace its human helpline staff with the chatbot, after staff and volunteers moved to unionise.

At the time, a NEDA representative told NPR: “Our volunteers are volunteers… They don't have crisis training. And we really can't accept that kind of responsibility.”

In the same NPR report, Professor Marzyeh Ghassemi, who studies machine learning and health at MIT, said that using chatbots for this kind of community support would be more harmful than helpful.

“If I'm disclosing to you that I have an eating disorder, I'm not sure how I can get through lunch tomorrow, I don't think most of the people who would be disclosing that would want to get a generic link. ‘Click here for tips on how to rethink food.’”

Why good bots go bad is currently a focus for AI researchers all over the world, particularly those working in a field known as interpretability. As it stands, little is known about how systems like ChatGPT actually work. Essentially, you train them on a vast amount of data, fine-tune them through some form of reinforcement learning, and then wait to see what happens. As these systems are learning, so are we.

In a recent episode of Hard Fork, a podcast produced by the New York Times (NYT), hosts Kevin Roose, a technology columnist for the NYT, and Casey Newton, a technology journalist, discussed this very phenomenon.

“I do think it’s worth just following this story because it is such a big question right now, in our society. These large language models, they are being shoved into products that millions of people use every day. And we still don’t really know how they work. And that feels like a major problem,” said Roose.

“I think if we had technology that was — if we had something going into all of our homes through the electrical grid or something that we didn’t know how it worked, there would be shock and alarm and congressional hearings about it.”

Casey Newton added, “I hope that, for its own sake, these AI folks get a lot better at interpreting their own models quickly. Because if not, this becomes the next big fight.”

It's a conversation we will need to keep having: according to an article from ABC News, Goldman Sachs economist Joseph Briggs “believes about two-thirds of existing occupations could be partially automated by AI.”

The same article cited a Microsoft survey in which almost half (46 per cent) of Australian workers said they were concerned AI could take over their jobs. At the same time, 65 per cent of workers said they would willingly hand over some of their more menial tasks to AI.

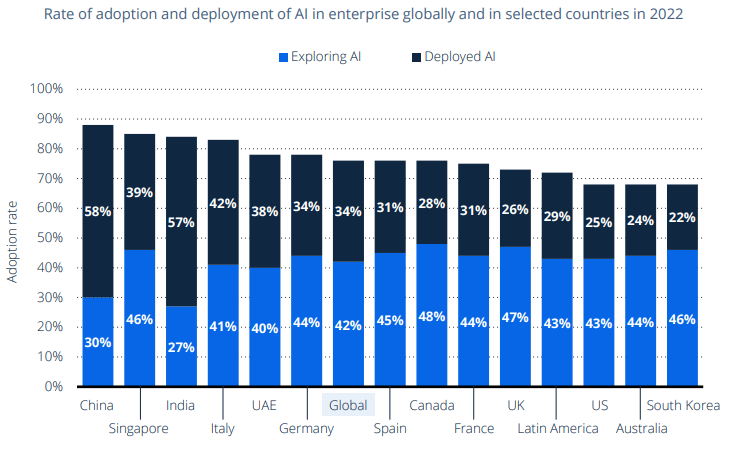

Statista research, drawing on data from IBM, found that three-quarters of companies worldwide are interested in adopting AI technologies in their workplaces within the next five years.

Australia's rate of AI adoption is currently below the global average (though on par with the US's), while China is leading the charge, with 60 per cent of IT professionals there reporting that their companies are actively implementing it.

As AI becomes more prevalent in our working lives, it's important that we continue to interrogate how we are using it, so that it enhances the human experience rather than replacing it.